We’re all familiar with the checkered history of the Whorf Hypothesis: the idea that a person’s worldview and cognition are limited by their language. At first it was widely accepted, to the point that it evolved into an urban legend about Eskimo words for snow, which supposedly allowed them to conceptualize it differently than English speakers. Eventually this was recognized as nonsense. In practice it was easy to translate any idea of snow between languages, and speakers of either language had no trouble conceptualizing and agreeing on the meanings.

Then, just when Whorfianism seemed to be on the decline, scientists discovered real, testable cases where natural language affects the brain’s development and capabilities. The best known involves languages that lack words for relative direction, such as left and right, and instead have only absolute directions, such as east and west. In this case, the brain develops the ability to maintain constant awareness of absolute cardinal positioning in a way that speakers of relative-direction languages cannot match. Lera Boroditsky, a Stanford University researcher in the fields of neuroscience and symbolic systems, wanted to find out whether these cardinally oriented languages affect the brain’s temporal capacities as well. She’s written a fascinating piece for the Edge blog on this subject. Here’s an excerpt:

“I gave people a really simple task. I would give them a set of cards, and the cards might show a temporal progression, like my grandfather at different ages from when he was a boy to when he’s an old man. I would shuffle them, give them to the person, and say “Lay these out on the ground so that they’re in the correct order.” If you ask English speakers to do this, they will lay the cards out from left to right. And it doesn’t matter which way the English speaker is facing. So if you’re facing north or south or east or west, the cards will always go left to right. Time seems to go from left to right with respect to our bodies. If you ask Hebrew speakers to do this, or Arabic speakers, they’re much more likely to lay the cards out from right to left. That suggests that something about the writing direction in a language matters in how we imagine time. But nonetheless, time is laid out with respect to the body.”

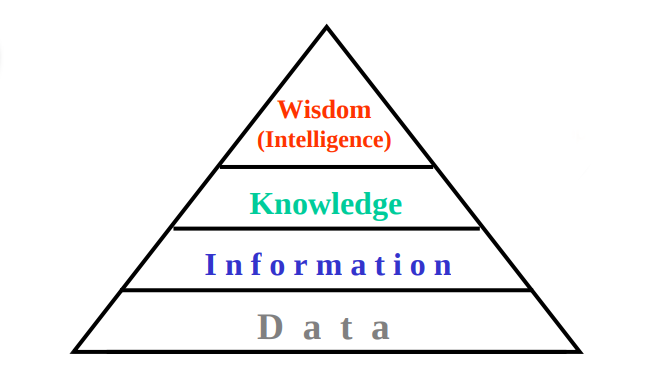

When she gave the same task to speakers of a cardinal-direction language, the results were unlike any system of temporal organization seen before. Instead of organizing time left to right, or in some other system relative to the speaker’s body, they organized it in an absolute coordinate system, regardless of which way they were facing when they began the experiment. These results prompted Lera to look for other testable differences in cognition among more conventional languages like English, Russian, and Hebrew. She discusses various examples, such as the finding that kids who speak genderless languages take longer to understand the differences between the sexes. There’s also an amusing aside about language and causality, based on the incident in which Dick Cheney shot a hunting partner in the face. Read the full piece, Encapsulated Universes, over at the Edge, which includes a video. Or listen to the audio above via SoundCloud. Either way, it’s an interesting reminder of the important relationship between intelligence and language.